Amazon Web Services (AWS), Amazon's comprehensive and evolving cloud computing platform, sees itself as a driver of the coming widespread adoption of machine learning (ML) and artificial intelligence (AI) applications. The company intends to achieve this by making it easy, practical, and cost-effective for customers to use generative AI in their businesses.

The plan is to introduce innovations across all three layers of the ML stack: infrastructure, ML tools, and purpose-built AI services.

Olivier Klein, Chief Technologist in Asia Pacific at Amazon Web Services (AWS)

“Our approach to generative AI is to invest and innovate across 3 layers of the generative AI stack, to take this technology out of the realm of research, and to make it available to customers of any size as well as to developers of all skill levels,” Olivier Klein, Chief Technologist in Asia Pacific at AWS, said in a recent online press briefing.

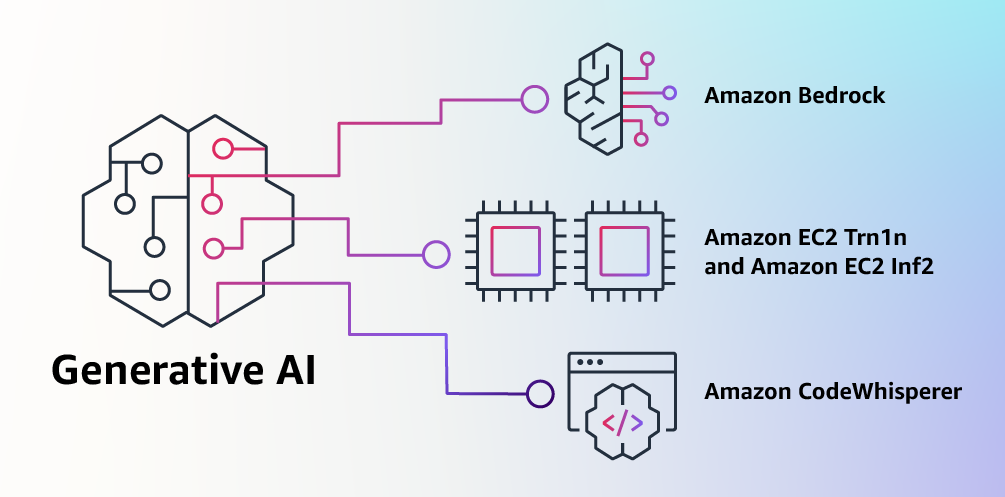

The company recently introduced four new innovations to support generative AI applications on AWS:

At the bottom layer, AWS is making generative AI more cost-efficient with best-in-class infrastructure: Amazon EC2 Inf2 instances, powered by AWS Inferentia2 chips, are now generally available. Inf2 instances can deliver up to 4 times higher throughput and up to 10 times lower latency than the prior generation, which AWS says gives them the highest performance, greatest energy efficiency, and lowest cost for running demanding generative AI workloads.

In the middle layer, AWS is making generative AI app development much easier with the preview launch of Amazon Bedrock, a new fully managed service that makes pre-trained foundation models (FMs) easily accessible through an API. Bedrock will also offer Amazon Titan, a family of industry-leading FMs developed by AWS, giving customers more options to find the model best suited to their use case.

At the top layer of the generative AI stack is Amazon CodeWhisperer, an AI coding companion that can flag or filter code suggestions that resemble open-source training data. It can also help developers skip time-consuming coding tasks and build faster with unfamiliar APIs.

Finally, in June, the company announced the launch of the AWS Generative AI Innovation Center, a new program designed to help customers worldwide build and deploy generative AI solutions. AWS is investing US$100 million in the program, which will connect AWS AI and ML experts with customers around the globe to help them envision, design, and launch new generative AI products, services, and processes.

“These new innovations,” Klein said, “will give customers the flexibility to choose the way they want to build their foundation models with generative AI.”

Beyond making it easy, practical, and more cost-effective for customers to use generative AI in their businesses, Klein also stressed the importance of digital training and upskilling.

“In my presentation, I talked about foundation models. I also talked about how we are now investing in industry or use-case-specific generative AI-powered applications that can help you do this and that. But I believe that aside from technology, it is also important to upskill people. That’s why we continue to invest in training and certification programs — in particular, training courses that are related to generative AI.”

Klein added that the company has trained more than 100,000 individuals in the Philippines in cloud skills. “And that’s only since 2017,” he noted.

Widespread adoption of ML to pave the way for reinvention of apps with generative AI

In the same media briefing, Klein also predicted the widespread adoption of machine learning and the reinvention of applications using generative AI in various use cases. “We are now seeing the next wave of widespread adoption of ML, and with it comes the opportunity for every customer experience and application to be reinvented with generative AI,” he said.

Klein also said that from day one, the cloud computing giant has focused on making ML accessible to a broad range of customers. “With our industry-leading capabilities, we have helped more than 100,000 customers of all sizes and across industries to innovate using ML and AI,” he said.

Today, AWS continues to invest not only in making foundation models (FMs) accessible but also in helping customers run their AI programs, particularly their generative AI applications, more cost-effectively. Customers can do this in three ways:

- By building their own FMs on purpose-built ML infrastructure;

- By leveraging pre-trained FMs as base models to build their applications; or

- By using services with built-in generative AI without requiring any specific expertise in FMs.

According to Klein, Amazon has been investing heavily in developing and deploying AI and ML technologies for more than two decades. These investments, he said, now power a wide range of use cases in both customer-facing services and internal operations, from the recommendation engines that personalize each customer’s shopping experience on Amazon.com to the AI-powered robots in company warehouses that help employees optimize order fulfillment. /JMC