AMD (NASDAQ: AMD) and Oracle Cloud Infrastructure (OCI) have recently joined forces to push the boundaries of AI performance and scalability.

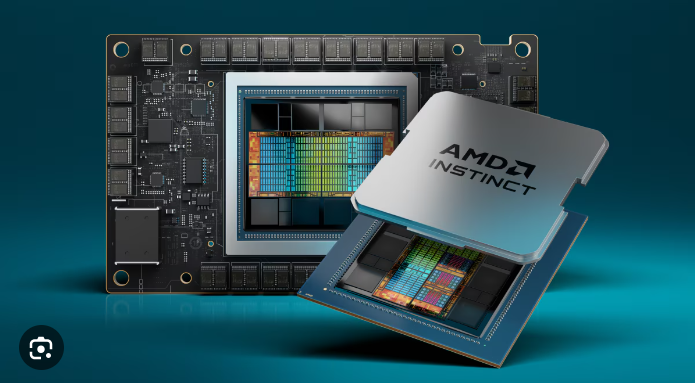

In a significant step for artificial intelligence (AI) infrastructure, AMD Instinct MI300X accelerators, paired with the AMD ROCm open software stack, now power OCI’s newest Compute Supercluster instance, BM.GPU.MI300X.8, which is designed to handle AI models with hundreds of billions of parameters.

The partnership targets large-scale AI training and inference: the OCI Supercluster supports up to 16,384 GPUs in a single cluster. Because it can run demanding workloads such as training and serving large language models (LLMs), the offering gives companies a new way to scale AI applications on cloud infrastructure.

Andrew Dieckmann, corporate vice president and general manager of AMD’s Data Center GPU Business, emphasized the growing impact of this collaboration: “AMD Instinct MI300X and ROCm open software continue to gain momentum as trusted solutions for powering the most critical OCI AI workloads.” He noted that the partnership with OCI would empower customers by delivering high performance, efficiency, and flexibility in system design.

AMD: Transforming AI infrastructure with unmatched performance

The arrival of AMD Instinct MI300X in the OCI ecosystem gives AI researchers and enterprises a new option for getting the most out of their AI models. Large AI workloads are often difficult to train and deploy at scale, in part because a model with hundreds of billions of parameters may not fit in a single accelerator's memory and must be split across many devices.

The MI300X accelerators are built to ease these bottlenecks with high memory capacity, bandwidth, and throughput: each MI300X carries 192 GB of HBM3 memory, and a BM.GPU.MI300X.8 instance combines eight of them.

One of the most notable features of this collaboration is OCI’s use of bare metal instances. These instances remove the overhead typically associated with virtualized compute environments, giving AI applications direct access to raw hardware resources.

Combined with AMD’s hardware, this gives customers the compute power needed to run models at a scale that was previously impractical.

Donald Lu, senior vice president of software development at Oracle Cloud Infrastructure, highlighted the value this combination brings to customers: “The inference capabilities of AMD Instinct MI300X accelerators add to OCI’s extensive selection of high-performance bare metal instances to remove the overhead of virtualized compute commonly used for AI infrastructure. We are excited to offer more choices for customers seeking to accelerate AI workloads at a competitive price point.”

A boost for AI innovators: Fireworks AI’s success story

Among the early adopters of OCI’s AMD-powered infrastructure is Fireworks AI, an enterprise platform for building and deploying generative AI. Fireworks AI offers more than 100 models, serving industries ranging from healthcare to finance.

According to Fireworks AI, the AMD Instinct MI300X accelerator has substantially improved its ability to handle large workloads.

Lin Qiao, CEO of Fireworks AI, described the transformative impact of AMD’s technology on their operations: “The amount of memory capacity available on the AMD Instinct MI300X and ROCm open software allows us to scale services to our customers as models continue to grow.”

Fireworks AI’s experience with the MI300X reflects a broader trend of AI becoming a key driver of business value across industries. Its ability to deliver fast, accurate AI services at scale shows how AMD’s hardware can support demanding next-generation AI workloads.

Last September, AMD also partnered with LM Studio to give its customers an AI assistant with document chat, accelerated by AMD Ryzen AI processors and Radeon GPUs. LM Studio 0.3, which supports acceleration on both, is now available. It features a revamped user experience and simplified installation and setup.

As AI models grow larger and more complex, the demand for infrastructure capable of handling these advanced computations will only increase. OCI’s adoption of AMD Instinct MI300X accelerators positions Oracle Cloud as a leader in the AI infrastructure space, providing customers with the tools needed to innovate in this rapidly evolving field.

By combining high-performance hardware, scalability, and cost-effectiveness, OCI and AMD aim to set a new standard for AI infrastructure. The collaboration supports AI developers and gives organizations the flexibility to choose the infrastructure that best fits their needs without compromising on performance.

As companies worldwide increasingly rely on AI to run their operations, the partnership between AMD and OCI marks an important step toward more efficient, scalable, and powerful AI infrastructure, with AMD’s MI300X accelerators at its center.