Alibaba Cloud, the digital technology and intelligence backbone of Alibaba Group, launched two open-source large vision language models (LVLMs): Qwen-VL and its conversationally fine-tuned counterpart, Qwen-VL-Chat. The models can comprehend images, text, and bounding boxes in prompts and support multi-round question answering in both English and Chinese.

Qwen-VL is the multimodal version of Qwen-7B, the 7-billion-parameter version of Alibaba Cloud’s large language model Tongyi Qianwen (also available as open source on ModelScope).

Qwen-VL understands both image inputs and text prompts in English and Chinese, and can perform tasks such as answering open-ended questions about images and generating image captions.

Alibaba Cloud’s large vision language models can understand images (IMAGE CREDIT: Shutterstock)

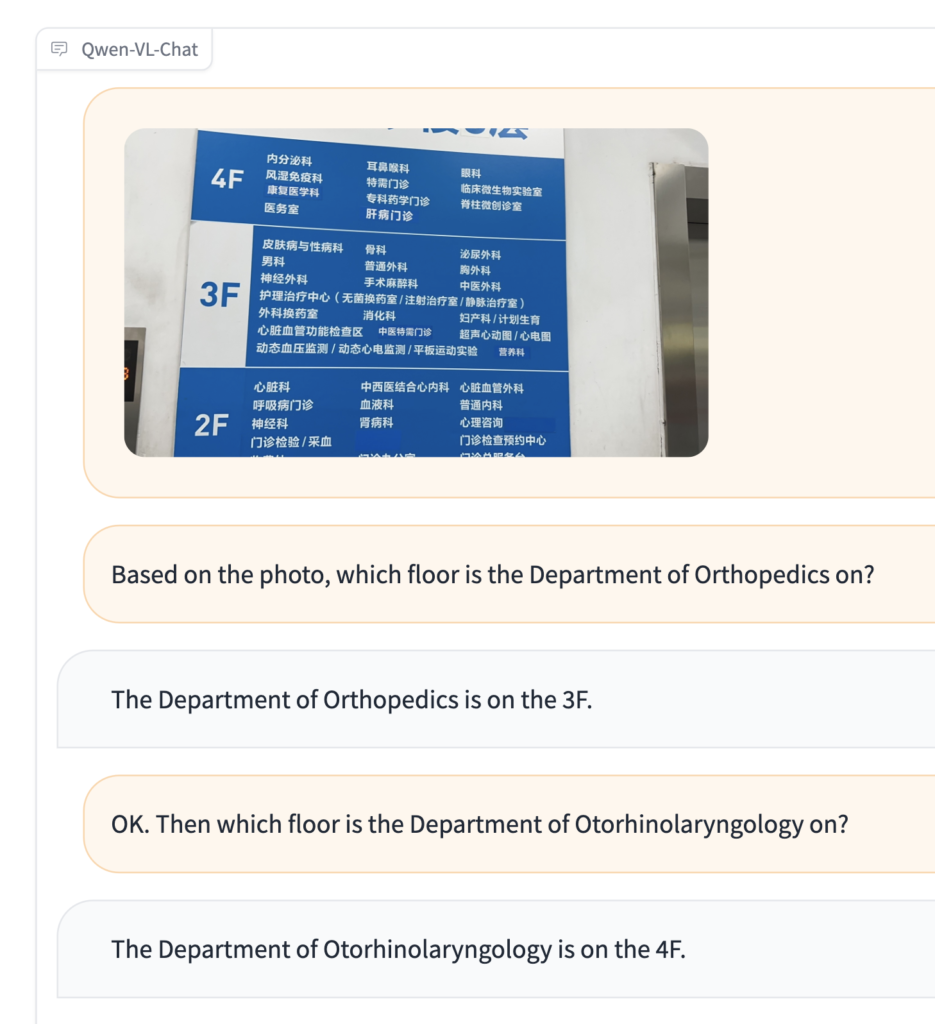

Qwen-VL-Chat caters to more complex interactions, such as comparing multiple image inputs and engaging in multi-round question answering. Leveraging alignment techniques, this AI assistant exhibits a range of creative capabilities, which include writing poetry and stories based on input images, summarizing the content of multiple pictures, and solving mathematical questions displayed in images.

Multi-round question answering via the Qwen-VL-Chat model
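For readers who want to try this kind of multi-round exchange, the following is a minimal sketch, assuming the usage pattern published in the models’ Hugging Face repository (`from_list_format` on the tokenizer and `chat` on the model, both supplied by the repository’s remote code). The helper names `load_qwen_vl_chat` and `multi_round_chat`, the model ID default, and the `device_map` setting are illustrative assumptions and should be checked against the current model card.

```python
def load_qwen_vl_chat(model_id="Qwen/Qwen-VL-Chat"):
    """Load the chat model and tokenizer (assumed model ID; verify on the model card)."""
    # Imported lazily so the helper below can be used without this dependency loaded.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # trust_remote_code is required because the chat helpers live in the model repo.
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        device_map="auto",  # assumption: let the library place weights on available devices
        trust_remote_code=True,
    ).eval()
    return model, tokenizer


def multi_round_chat(model, tokenizer, image, questions):
    """Ask several questions about one image, carrying the dialogue history forward."""
    history = None
    answers = []
    for turn, question in enumerate(questions):
        if turn == 0:
            # The first turn combines the image reference and the text prompt.
            query = tokenizer.from_list_format([{"image": image}, {"text": question}])
        else:
            # Follow-up turns are plain text; the image stays in the history.
            query = question
        response, history = model.chat(tokenizer, query=query, history=history)
        answers.append(response)
    return answers
```

A call such as `multi_round_chat(model, tokenizer, "photo.jpg", ["What is in this picture?", "Where is the dog?"])` would return one answer per question, with the second answer conditioned on the first exchange; the file name and questions here are placeholders.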

Contribution to open source and inclusivity

In a bid to democratize AI technologies, Alibaba Cloud has shared the models’ code, weights, and documentation with academics, researchers, and commercial institutions worldwide. The models are accessible via Alibaba’s AI model community ModelScope and the collaborative AI platform Hugging Face. Companies with over 100 million monthly active users can request a license from Alibaba Cloud for commercial use.

With their ability to extract meaning and information from images, these models have the potential to change how people interact with visual content. For instance, drawing on their image comprehension and question-answering capabilities, the models could in the future provide information assistance to visually impaired individuals shopping online.

The Qwen-VL model was pre-trained on image and text datasets. Whereas other open-source large vision language models process and understand images at a resolution of 224×224, Qwen-VL can handle image input at a resolution of 448×448, resulting in better image recognition and comprehension.
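To give a sense of what the jump between the two resolutions mentioned above means in practice, here is a small worked calculation. The pixel counts follow directly from the stated resolutions; the patch size of 14 is an illustrative assumption (a common choice for vision transformers), not a figure given in this article.

```python
def pixel_count(side: int) -> int:
    """Number of pixels in a square image with the given side length."""
    return side * side


def patch_count(side: int, patch: int) -> int:
    """Number of non-overlapping square patches a square image is split into."""
    if side % patch != 0:
        raise ValueError("side must be a multiple of the patch size")
    return (side // patch) ** 2


LOW_RES, HIGH_RES = 224, 448
PATCH_SIZE = 14  # assumption for illustration only

# Doubling each side quadruples the pixel count.
print(pixel_count(HIGH_RES) / pixel_count(LOW_RES))  # 4.0

# Under the assumed patch size, the encoder sees four times as many patches.
print(patch_count(LOW_RES, PATCH_SIZE), patch_count(HIGH_RES, PATCH_SIZE))  # 256 1024
```

In other words, a 448×448 input carries four times the visual detail of a 224×224 one, which is consistent with the article’s point about finer-grained recognition, at the cost of more computation per image.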

Based on various benchmarks, Qwen-VL recorded outstanding performance on several vision-language tasks, including zero-shot captioning, general visual question answering, text-oriented visual question answering, and object detection.

According to Alibaba Cloud’s own benchmark test, Qwen-VL-Chat also achieved leading results in both Chinese and English for text-image dialogue and for alignment with human preferences. The test involved over 300 images, 800 questions, and 27 categories.

Earlier this month, Alibaba Cloud open-sourced its 7-billion-parameter LLMs, Qwen-7B and Qwen-7B-Chat, as part of its ongoing contribution to the open-source community. The two models have had over 400,000 downloads within a month of their launch.

For more information, check out the Alizila story here and get more details of Qwen-VL and Qwen-VL-Chat on the ModelScope, Hugging Face, and GitHub pages. The models’ paper is also available here.