By Jan Michael Carpo, Reporter

Earlier this month, Meta made waves by announcing a change to its social media policies — removing its network of independent fact-checking moderators. The company, which operates Facebook, Instagram, and WhatsApp, has relied on these moderators since 2016 to curb the spread of misinformation.

But with the recent shift, Meta CEO Mark Zuckerberg claims the company is making this move to “restore free expression” for millions of users, suggesting that fact-checkers have stifled open dialogue by imposing their own biases.

This development comes at a time when misinformation is already rampant and public trust in online platforms is eroding. The news raises troubling questions about the future of social media, where political and geopolitical tensions further muddy the waters.

What does this bold move mean for users on Meta’s platforms? And how does it fit into a larger, complex narrative involving other social media giants, like TikTok, caught in the crosshairs of government scrutiny and national security concerns?

In this article, we delve into Meta’s controversial decision, its potential implications for the battle against online falsehoods, and the broader challenges the social media ecosystem faces as it grapples with truth, trust, and the consequences of its own rapid evolution.

Fact-checking dilemma: Free speech or dangerous precedent?

The removal of Meta’s independent fact-checkers marks a profound shift in how the platform approaches the moderation of online content. These fact-checkers have been a crucial part of the company’s strategy to combat the spread of misinformation — especially as the platforms became breeding grounds for fake news and conspiracy theories.

Since 2016, fact-checkers, often affiliated with third-party organizations, have worked to flag, label, or remove content deemed false or misleading.

Zuckerberg argues that eliminating fact-checkers will allow users greater freedom to express themselves without the constraints of “biased” moderation.

However, many critics view this move as a dangerous retreat in the battle against misinformation. Removing fact-checkers might appear to be a step toward greater user autonomy, they argue, but it could also pave the way for a more polluted online information environment. Numerous experts in the field have rejected the claim that fact-checkers are “too politically biased.”

It’s not about censorship but adding context

Angie Holan, the head of the International Fact-Checking Network (IFCN), which supports and verifies the work of independent fact-checkers globally, argues that fact-checking is not about censorship but about adding context and providing clarity in the face of misleading or false claims.

“It’s a process that, when done right, helps users make informed decisions without infringing on their right to free speech,” Holan said.

The most immediate and alarming consequence of Meta’s decision may be the financial blow it deals to fact-checking organizations, particularly in regions like Africa, where Facebook is a primary source of news and information.

Many fact-checking groups rely on Meta’s funding to carry out vital work, especially in areas where misinformation can have destabilizing effects on democracy.

Without this support, the ability to combat harmful fake news in developing countries could be severely compromised, leaving citizens vulnerable to exploitation by malicious actors and political forces.

The global war on misinformation: More than just a tech issue

Meta, which has been investing aggressively in artificial intelligence to improve customer interaction, streamline services, and provide personalized content to users, decided in January this year to scale back its fact-checking program.

But the decision comes at a time when misinformation is at an all-time high.

From COVID-19 vaccine misinformation to election interference, falsehoods have played a pivotal role in shaping political and social outcomes across the globe. This problem isn’t confined to one region or one platform — it’s a global issue. And it’s not just a tech problem but a societal one, tied directly to issues of democracy, trust, and accountability.

As Meta steps away from its role as a content moderator, the question arises: who will fill the void? The need for a solution is urgent. Social media platforms, as the de facto news distributors for billions of users, must take responsibility for the content that circulates on their platforms.

However, recent history has shown that moderation — especially when done by independent fact-checkers — is a precarious and controversial process. Removing the fact-checking mechanism could further fuel the rapid spread of misinformation, which may in turn erode public trust in the platform and its ability to maintain a responsible, informed community.

In a world where anyone with a smartphone can become both a publisher and a consumer of news, the fight against misinformation demands innovation.

Perhaps new models of fact-checking — either crowdsourced or algorithmic — can help fill the gap left by Meta’s decision. But until such alternatives are fully developed, the responsibility will likely fall on users to be more discerning, critical, and cautious when consuming information. Developing critical thinking skills and relying on credible, authoritative sources will be key in navigating the online world moving forward.

TikTok: National security concerns and cultural power struggle

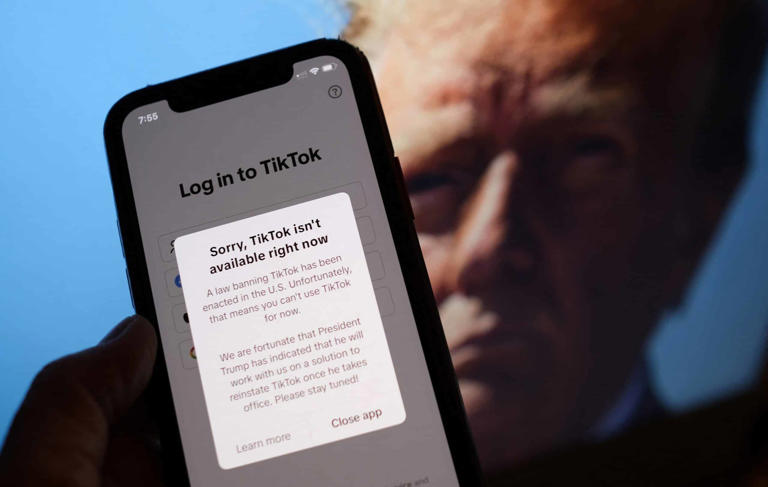

While Meta’s announcement has dominated the headlines, another major social media player is caught in a different but equally fraught geopolitical battle. TikTok, the viral video-sharing app with over 1 billion active users, has faced mounting scrutiny in several countries, particularly the United States.

The app, owned by the Chinese company ByteDance, has raised serious concerns over national security risks — specifically the potential for the Chinese government to access data collected by TikTok and use it to influence political and social dynamics in foreign countries.

The political wrangling over TikTok’s future has been ongoing for years, with the Trump administration attempting to ban the app during its first term and the Biden administration later pushing for a forced sale of TikTok’s U.S. operations to a domestic company.

These debates underscore the tension between the economic and cultural impact of TikTok — an app that has reshaped entertainment, marketing, and communication — and the potential risks it poses to national security in a geopolitically fraught era.

While Meta and TikTok are often seen as competitors, they share a common challenge: balancing the interests of governments with those of users. Both platforms are facing increasing calls for regulation, whether it’s over the spread of misinformation, user data privacy, or foreign influence in national affairs.

The outcome of these debates will shape the future of the social media landscape and influence how we, as global citizens, engage with technology.

A call to action: Restoring trust in the digital age

The recent shifts in Meta’s moderation policies and the ongoing controversy surrounding TikTok signal a deeper crisis in the digital world.

With misinformation running rampant and trust in social media platforms eroding, the need for a thoughtful, multifaceted approach to regulation and moderation has never been clearer.

Platforms like Meta and TikTok must do more to protect users from false and harmful information. Users, for their part, need to cultivate the skills to critically assess the information they consume.

Governments, too, have a critical role to play. Clear, transparent regulations that address misinformation, data privacy, and national security concerns without stifling free speech are essential in maintaining the integrity of the digital age.

In the end, the future of online truth will depend on platforms, governments, and users alike working together to preserve the digital ecosystem as a force for good while fostering connection, creativity, and informed discourse in an increasingly complex world.